With TJ Byers

Power Supply Design 101

Question:

Is there a formula to determine the size of the output filter capacitor in a DC power supply? I find many different sizes in different DC power sources, and would like to know what determines their size.

Ted Asousa

via Internet

Answer:

The simple answer is that the filter capacitor reduces the ripple voltage that is always present on a DC power supply output, so its size depends on the load current and on how much ripple you can tolerate. Unfortunately, the formula isn't as simple, but I think I've at least made it less math intensive. Here is the simplified equation:

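$$C = \frac{I_{LOAD} \times t}{P\text{-}P_{RIPPLE}} \times 10^{6}$$

where

$C$ = the filter capacitance in microfarads (the factor of $10^{6}$ converts farads to microfarads)

$I_{LOAD}$ = the DC output current in amps; it is calculated using $I_{LOAD} = E_{OUT}/R_{LOAD}$

$P\text{-}P_{RIPPLE}$ = the acceptable peak-to-peak DC output ripple voltage, in volts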
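
For anyone curious where the simplified equation comes from, here is a rough sketch of the reasoning. It rests on two assumptions of mine that the column does not spell out: the load draws a constant current, and the capacitor alone supplies that current for the time $t$ between charging peaks. $\Delta V$ is simply shorthand for the peak-to-peak ripple.

$$
\begin{aligned}
Q &= C \, \Delta V && \text{(charge stored for a voltage change } \Delta V\text{)} \\
I_{LOAD} \times t &= C \, \Delta V && \text{(charge the load removes during } t\text{)} \\
C &= \frac{I_{LOAD} \times t}{\Delta V} && \text{(in farads; multiply by } 10^{6} \text{ for microfarads)}
\end{aligned}
$$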

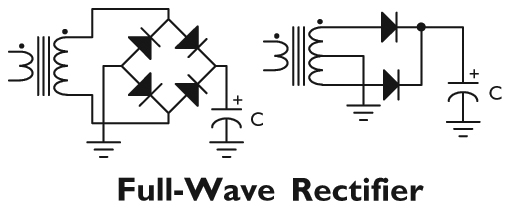

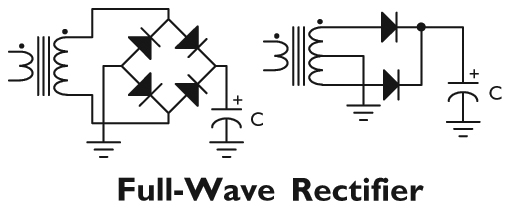

So far, so good. Now comes t, which is the hard part because it depends on the rectification method used and the line frequency. The capacitor requirements are less strict if you use full-wave rectification.

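For full-wave rectification, t is the time in seconds between charging peaks: $t = 1/(2 \times \text{line frequency})$, which gives $t = 0.0083$ s for 60 Hz and $t = 0.01$ s for 50 Hz.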
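
The timing step can be sketched in a few lines of Python. The function name `discharge_time` and the `full_wave` flag are hypothetical labels of mine. The half-wave case (t = 1/line frequency, since the capacitor is recharged only once per cycle) is my own addition and is not given in the column.

```python
def discharge_time(line_frequency_hz: float, full_wave: bool = True) -> float:
    """Return t, the time in seconds between charging peaks.

    Full-wave: the capacitor is recharged twice per line cycle, so t = 1/(2f).
    Half-wave (assumption, not from the column): once per cycle, so t = 1/f.
    """
    if line_frequency_hz <= 0:
        raise ValueError("line frequency must be positive")
    pulses_per_cycle = 2 if full_wave else 1
    return 1.0 / (pulses_per_cycle * line_frequency_hz)


if __name__ == "__main__":
    # Print t for the two common line frequencies, full-wave rectified.
    for f in (60, 50):
        print(f"{f} Hz full-wave: t = {discharge_time(f):.4f} s")
```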

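How about an example? Let's design for five volts out at one amp, with 100-mV peak-to-peak ripple, running from a 60-Hz wall-wart. Plugging these values into the equation nets a capacitance value of about 83,000 µF. That is a big number: an off-the-shelf 10,000 µF cap would leave roughly 0.83 V of peak-to-peak ripple at this load, so hitting the 100-mV target takes far more capacitance than a single 10,000 µF part.

$$C = \frac{1\,\text{A} \times 0.0083}{0.1\,\text{V}} \times 10^{6}$$

$$C = \frac{0.0083}{0.1} \times 10^{6}$$

$$C = 0.083 \times 10^{6} = 83{,}000\ \mu\text{F}$$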
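
If you'd rather let a script do the arithmetic, here is a minimal Python sketch of the simplified equation and of the same equation solved for ripple. The function names are hypothetical, chosen only for illustration. Inputs are in amps, seconds, and volts, and capacitance is in microfarads.

```python
def filter_capacitance_uf(i_load_a: float, t_s: float, ripple_pp_v: float) -> float:
    """Capacitance in microfarads: C = [(ILOAD x t) / ripple] x 10^6."""
    if ripple_pp_v <= 0:
        raise ValueError("peak-to-peak ripple must be positive")
    return (i_load_a * t_s / ripple_pp_v) * 1e6


def ripple_for_capacitor(i_load_a: float, t_s: float, c_uf: float) -> float:
    """Peak-to-peak ripple in volts for a given capacitor (same equation, rearranged)."""
    if c_uf <= 0:
        raise ValueError("capacitance must be positive")
    return i_load_a * t_s / (c_uf * 1e-6)


if __name__ == "__main__":
    # Inputs from the example: 1 A load, t = 0.0083 s, 0.1 V ripple target.
    c = filter_capacitance_uf(1.0, 0.0083, 0.1)
    print(f"required C = {c:,.0f} uF")
    # Check what a standard 10,000 uF part would give at the same load.
    r = ripple_for_capacitor(1.0, 0.0083, 10_000)
    print(f"ripple with 10,000 uF = {r:.2f} V p-p")
```

The second function is handy for checking what ripple a standard capacitor value would actually give before you buy it.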
